With the exception of the occasional “Are you happy to continue with installation?” type pop-up message, computers haven’t classically cared much about how we feel.

That’s all set to change with the arrival of affective computing, the development of systems and devices that are able to recognize, interpret and respond accordingly to human emotions. With modern artificial intelligence breakthroughs having given us machines with significant IQ, a burgeoning group of researchers and well-funded startups now want to match this with EQ, the term used to describe a person’s ability to recognize the emotions of those around them.

“Today’s A.I. systems have massive amounts of cognitive capability, but there’s no emotional intelligence in these technologies,” Gabi Zijderveld, head of product strategy at Affectiva, told Digital Trends. “Many of our interactions with A.I. systems are currently very transactional, and often superficial and ineffective. That’s because these systems don’t truly understand how we’re reacting to them, and are therefore unable to adapt to how we’re engaging with them. We think that’s a fundamental flaw in technology today.”

Welcome to the world of affective computing, a heady blend of psychology and computer science. Based on the idea that something as ephemeral as emotion can be captured and quantified as its own data point, it seeks to create technology that’s able to accurately mine our emotions. In doing so, its proponents claim, it will change the way that we interact with the devices around us: a transformation so complete that, in just a few years, going without it will be as unimaginable to us as using a computer without a graphical interface.

Boosters promise a plethora of exciting new applications and interactions. We’ll get computers which truly understand us on an emotional level. As for what’s asked of us in return? Simple: A new level of surveillance that aims to act on our innermost thoughts.

All of which leads us to the inevitable question: Exactly how should we feel about machines that know how we feel?

Understanding all things human

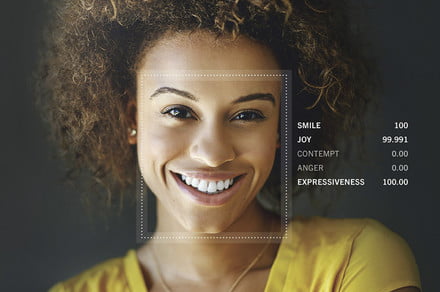

“We’ve developed what we call human perception A.I.,” Zijderveld explained. “It’s technology that can understand all things human. We don’t use any hardware sensors. We’re software only, and that software is designed [to analyze] the human face and voice to understand emotions, expressions, and reactions.”

When it comes to affective computing, perhaps no other company is quite so well known as Affectiva. Growing out of MIT’s pioneering Media Lab, the startup was founded a decade ago with the goal of creating emotional measurement technology across a range of domains. Since then, the company has gone from strength to strength, riding a wave of increasingly connected devices and A.I. firepower. Co-founded by current CEO Rana el Kaliouby and Rosalind Picard, Affectiva now employs more than 50 people. This month, its employees had a party to celebrate its tenth birthday. It also took the opportunity to announce its latest funding boost, $26 million, which joins the $34.3 million it had previously raised from eager investors.

[youtube https://www.youtube.com/watch?v=iQxS9Eq-hms]

“By activating the camera in any type of device, we can look at how people react to digital content and measure, frame by frame, what the responses are,” Zijderveld said. “If you do that over a large population, you can get quite meaningful insights into how people are engaging with content. Big brands can then use these insights to optimize advertising, story placement, taglines and so on. Research has shown that this is a very scientific and unbiased way of assessing engagement.”

Advertising is just one of the areas that Affectiva has developed technology for. All of these, however, are governed by the same central idea: namely, that machines are much better than people when it comes to recognizing emotion.

Emotion tracking all over the world

As with many pattern recognition tasks, A.I. can be vastly superior compared to humans when it comes to reading the subtle emotions on a person’s face. For example, how much emotion do you think you display when you’re watching TV? In the event of a big game or a satisfying death on Game of Thrones we might expect the answer to be “quite a lot.” But that’s not necessarily the case when, say, you’re half-watching a shampoo commercial or a local news item. It’s here that tools like Affectiva’s can come in handy, by searching for only the tiniest hint of a microexpression that even the subject may not be aware of.


“People are actually quite expressive, although it is nuanced,” Zijderveld continued. “To capture that subtlety, we take a machine learning or deep learning approach. That’s really the only way that you can model for that type of complexity. More traditional heuristic models are just not going to work.”

To train its deep learning models, Affectiva has analyzed, to date, 7.8 million faces in 87 countries. This has given it ample training data to develop its models, as well as allowing it to observe some of the differences in how emotions are expressed around the globe.

This is, unsurprisingly, complicated. Zijderveld explained that, while the broad strokes of emotions are more or less universal, the way people express them differs tremendously. Intensity of expression differs by culture. So does the way men and women express emotion. Women are typically more expressive than men, although the amount varies depending on geography. In the U.S., women are about 40 percent more expressive than men, whereas in the U.K. men and women are evenly expressive. Emotion differs depending on group size also, and this also differs depending on location. People in the States get more expressive when they are watching something in a group. In Japan, they will dampen their emotions in a group setting.

But Affectiva doesn’t just stop at microexpressions. Its technology can also analyze the way that people talk, looking for what Zijderveld calls the “acoustic prosodic” features of speech. This refers to things such as tempo, tone and volume, as opposed to the literal meaning of spoken words.

Affectiva CEO Rana el Kaliouby Affectiva

“We did this because we wanted to start delivering what are called multi-modal models, meaning models that look at both face and voice,” Zijderveld said. “We believe that the more signals you can analyze, the more accurate you can be with your prediction of a human state.”

As more and more data is gathered, from visual information about users to heart rate data to contextual information, it will become easier than ever to build up an accurate image of a person’s emotional state at any one moment.
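To make the multi-modal idea a little more concrete, here is a minimal sketch of one generic way to combine two signals, often called late fusion: each model scores the emotions separately, and the scores are blended afterward. This is not Affectiva’s method, which the company does not describe in detail here. The function name `fuse_scores`, the weighting parameter, and the example scores are all hypothetical.

```python
def fuse_scores(face: dict[str, float],
                voice: dict[str, float],
                face_weight: float = 0.5) -> dict[str, float]:
    """Blend per-emotion scores from two models with a weighted average.

    Hypothetical helper: a generic late-fusion sketch, not any vendor's
    actual pipeline. An emotion missing from one model counts as 0.0.
    """
    emotions = set(face) | set(voice)
    combined = {
        emotion: face_weight * face.get(emotion, 0.0)
        + (1.0 - face_weight) * voice.get(emotion, 0.0)
        for emotion in emotions
    }
    total = sum(combined.values())
    # Renormalize so the blended scores sum to 1 (when there is any signal).
    if total == 0:
        return combined
    return {emotion: score / total for emotion, score in combined.items()}


# Made-up scores, purely for illustration.
face_scores = {"happiness": 0.6, "sadness": 0.4}
voice_scores = {"happiness": 0.8, "sadness": 0.2}

fused = fuse_scores(face_scores, voice_scores)
print(max(fused, key=fused.get))
```

A weighted average is the simplest possible choice. Real systems may instead train a single model on both signals at once, and further inputs such as heart rate could be added as extra dictionaries in the same way.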

It all starts with Darwin

As with many technological pipe dreams that are only now starting to become feasible, it is easy to assume that the goal of scientific quantification of emotion has not existed for long. In fact, it’s quite the contrary. Efforts to decode the face as a source of information date back at least as far as 1872.


That was the year in which no less an authority than Charles Darwin published one of his lesser known books, The Expression of the Emotions in Man and Animals. That book detailed the exact minutiae of how emotions manifest themselves through facial contortions, such as kids’ weeping, which involved compression of the eyeballs, contraction of the nose, and raising of the upper lip. Fear, meanwhile, could be spotted through “a death-like pallor,” labored breathing, dilated nostrils, “gasping and convulsive” lip movements, and more.

It was a tireless catalog designed, as academic Luke Munn writes in Under Your Skin: The Quest to Operationalize Emotion, “to formalize [a] grammar of muscular movements and gestural combinations.”

These efforts were built on by other researchers following on from Darwin. In the 1970s and 80s, Paul Ekman and Wallace Friesen created and developed their Facial Action Coding System (FACS). The basis for much of the subsequent research in this area, it took Darwin’s ideas to a far more empirically precise degree than he could ever have imagined, by literally writing a code in which every tiny movement of the face could be described through so-called “Action Units.” For instance, sadness could be reported as 1+4+15 (Inner Brow Raiser, Brow Lowerer, Lip Corner Depressor). Happiness, on the other hand, was 6+12 (Cheek Raiser, Lip Corner Puller).
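The Action Unit notation lends itself to a simple lookup table. The sketch below is only an illustration: it uses the five Action Units and two emotion codes named above, while the helper names `describe_code` and `match_emotions` are hypothetical, and the full FACS catalog is far larger than this.

```python
# Only the Action Units and emotion codes mentioned in the text are included.
ACTION_UNITS = {
    1: "Inner Brow Raiser",
    4: "Brow Lowerer",
    6: "Cheek Raiser",
    12: "Lip Corner Puller",
    15: "Lip Corner Depressor",
}

EMOTION_CODES = {
    "sadness": frozenset({1, 4, 15}),
    "happiness": frozenset({6, 12}),
}


def describe_code(code: str) -> list[str]:
    """Expand a code such as '6+12' into its Action Unit names."""
    return [ACTION_UNITS[int(part)] for part in code.split("+")]


def match_emotions(active_units: set[int]) -> list[str]:
    """Return every listed emotion whose Action Units are all active."""
    return [
        emotion
        for emotion, units in EMOTION_CODES.items()
        if units <= active_units
    ]


print(describe_code("1+4+15"))
print(match_emotions({6, 12}))
```

Matching on exact sets of Action Units is a deliberate simplification. It shows why the scheme was attractive to researchers: once a face is reduced to a list of numbers, comparing expressions becomes a mechanical operation.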

The advent of modern computing systems opened up new possibilities for these insights to be analyzed. The development of facial recognition technology, for instance, presented researchers with the ability to start encoding these observations into machines.

[youtube https://www.youtube.com/watch?v=I_03LsSHNgA]

Deep learning networks, which explore the relationship between inputs and outputs, let researchers develop systems which could not only link facial movements with certain emotions, but also seek them out in new subjects. It was here that Affectiva and other rival companies sprang to life.

“From a technological point of view, the task of accurate emotion recognition was made possible by the active development of artificial neural networks,” George Pliev, founder and CEO of emotion-tracking company Neurodata Lab, told Digital Trends. “Thanks to them, machines learned to understand emotions on faces turned sideways and in low-light conditions. We got cheaper technology: even our smartphones have built-in neural network technologies [today]. This allows [us] to integrate emotion recognition into service robots, detect emotions using a simple webcam, or to quickly process data in the cloud.”

All the better to understand you, my dear!

How emotion tracking changes everything

But as impressive as these technologies are as technological showcases, do they really have fundamentally useful applications? After all, as Zijderveld acknowledged to us, technologies can only catch on if they truly add value to users’ lives. This is especially true as technology becomes more controversial. The more controversial the tech, the bigger the tradeoff we make by using it, and the more value it therefore needs to show to be worth the sacrifice.

Affectiva showcases its Facial Action Coding System (FACS) analysis of this scene from Interstellar in which Matthew McConaughey’s character emotionally reacts to seeing a video message from his family. Affectiva

The field of affective computing is not short of examples of how it could be used, however. Far from it; this is a field brimming with opportunity. Imagine, for instance, that the movie you’re watching or the video game you’re playing could adjust its intensity based on your emotional responses. Perhaps a survival horror game notices that you’re not sufficiently anxious and cranks up the difficulty and scares to ensure that you are.

Or how about the classroom? As students increasingly rely on digital technologies for their schoolwork, a textbook could tell whether they’re struggling with a particular part of the curriculum and either inform the teacher, or automatically adjust how the information is presented to improve clarity.


It could have enormous impacts for health tracking, too. We live in a world in which Apple Watches possess the necessary heart-reading tech to spot potentially life-threatening conditions like atrial fibrillation. But the way in which mental health data, such as a person’s changing mood over time, is recorded lags a long way behind. Scanning your face for emotional signifiers whenever you look at your phone could help track your mood on a daily basis, every bit as effortlessly as a pedometer measures the number of steps you take.

Similar technology will be applied in cars. What if a dash cam watched your every move and made decisions accordingly? That could be something as simple as spotting flushed cheeks on all passengers and deciding to crank up the AC accordingly. It could also be altogether more lifesaving, such as noticing that the driver is distracted or tired and suggesting that they pull over and rest. “In the last few years we’ve seen a tremendous increase in interest from car manufacturers,” Zijderveld continued. “It made us realize that there really is a big opportunity for using this technology for in-cabin sensing.”

Sample graphics of the methodology behind Affectiva’s in-car emotion tracking system. Affectiva

As with Affectiva’s original mission, it could additionally make advertising smarter. Right now, companies like Amazon use recommender systems based on the products that you, and customers like you, have previously purchased. But what if it were possible to go further and make recommendations based on how you’re feeling? When it comes to spending money, all of us are guided by our emotions more than we’d necessarily like to think. Emotion-tracking tech that’s able to present the right product at the right time could, quite literally, be worth billions of dollars.

Still a way to go

All of the above applications are ones that are currently being explored. There’s still room for improvement, though. “Even among these — some emotions are still tough nuts for detection systems,” Neurodata Lab’s George Pliev said. “We have recently tested the most well-known emotion recognition algorithms, and found that happiness, sadness, and surprise were the easiest emotional facial expressions to detect, while fear, disgust, anger, and a neutral state were the most difficult for A.I. Complex cognitive states, hidden, mixed and fake emotions, among other things, would require analysis and understanding of the context, and this hasn’t yet been achieved.”

Multi-modal models, like those described by Affectiva, can help add more data points to the picture. But there’s certainly plenty that emotion tech companies can do in order to showcase that their technology is accurate and free from bias.

An example of real-world driver data collected by Affectiva Affectiva

There’s a deeper issue, too: one that involves our fears about technology mining our emotions. It’s the same concern that arises whenever a well-connected insider talks about the way that social networks are designed specifically to promote addictive behaviors on the part of users: giving us a short-lived dopamine rush at the cost of, potentially, longer-term negative impacts. It’s the same concern that arises whenever we hear about Facebook or YouTube algorithms that are predicated on pointing us toward provocative content designed to elicit some kind of emotion, no matter whether it’s “fake news” or not. And it’s the same concern that arises when we think about the anthropomorphization effects of personalizing A.I. assistants like Alexa to encourage us to bring surveillance tools into our own homes.

In short, it’s the fear that something irrational and primitive about our animal brain will be exploited by brilliant psychologists turning their helpful insights into for-profit code.


Zijderveld said that Affectiva works hard to address these challenges. It champions privacy, with its services all being opt-in by default.

“There are just certain markets with certain use-cases to which we will not sell our technology,” she said. “That was true even in the early days. The company at one point got an offer for a $40 million investment from a government agency that was only going to make the funding available if we would develop the technology for surveillance. This was at a time when [Affectiva] was struggling and was worried about being able to make payroll a few months out. Here we had an offer of $40 million on the table, but it was in violation [of what we believed as a company]. The co-founders walked away from it.”

The next ubiquitous tech

Even with the potential risks, it seems that there is enough enthusiasm for emotion-tracking technologies that the field will continue to grow. Along with Affectiva and Neurodata Lab, other startups in this field include the likes of iMotions and RealEyes. The company Neuro-ID was founded based on research from Brigham Young University suggesting that your mouse movements (!) can reveal your emotions.

Major tech giants are starting to muscle in, too. In 2016, Apple acquired Emotient, a San Diego-based company which also developed tech designed to categorize emotion based on facial expressions.

While for us the winter is almost over, it is coming to #Westeros. We analyzed the #trailer for @HBO’s #GoT #final #season using #Emotion #AI. And the top-3 emotions are… sadness (70%), anxiety (22%), and anger (3%)! https://t.co/tvflLHmTwW pic.twitter.com/Qht1FuiVPJ

— NeuroData Lab (@NeurodataLab) March 7, 2019

Late last year, Amazon patented technology intended to let Alexa monitor users’ emotions based on the pitch and volume of their commands. In doing so, the retail leviathan thinks its A.I. assistant will be able to recognize “happiness, joy, anger, sorrow, sadness, fear, disgust, boredom, [or] stress” — and provide “highly targeted audio content, such as audio advertisements or promotions” accordingly.

Microsoft has gotten in on the act as well. As has Facebook, and many, many others.

With ads to sell, predictions to be made, or just users to be placated and kept happy, there’s plenty of potential upside here. The question is whether this will remain a bit part of the overall user experience, or whether using a computer without emotion tracking will one day seem unthinkable.

“This is going to be something that’s ingrained in the fabric of our devices, constantly working in the background,” Zijderveld assured us. “It’ll be similar to geolocation. Today, just about every app is location-aware. That wasn’t the case only 10 years ago. We believe it will be that pervasive. The market opportunity is tremendous.”
